
28 January 2015

In 2011, at the Play the Game conference in Germany, I heard Dick Pound, a member of the International Olympic Committee since 1978 and the founding President of the World Anti-Doping Agency (WADA), make some remarkable claims about the unwillingness of sports officials to police doping. Then at the 2013 Play the Game Conference, in Aarhus, Denmark, Pound made even stronger statements, including an admission that anti-doping agencies don’t really know how many athletes dope, and actually don’t really want to know.

As a scholar who studies the role of evidence in policy, I find that doping in sport provides a fantastic case study in how science is used, and not used, in decision making. That is the subject of an essay I have in Nature this week. This companion piece at Sportingintelligence provides some additional background and data.

At the 2013 Play the Game conference, Perikles Simon of the Johannes Gutenberg University in Mainz and Walter Palmer, a former NBA player then working for UNI Sport PRO, presented some exploratory looks at WADA drug test data. Palmer put it this way: “Does compliance with the WADA code reduce prevalence of cheating? We cannot know.”

After looking at this issue, I think he is right.

Since that Aarhus conference I have invested some effort in checking these claims by contacting WADA and the US Anti-Doping Agency (USADA) and asking for data or evidence. Perhaps not surprisingly, Pound, Simon and Palmer are all correct. Anti-doping agencies are not making good use of their access to athletes and doping tests, and consequently just don’t have a good sense of the magnitude of the problem or the effectiveness of their regulations.

Here at Sportingintelligence, I’ve written a few pieces on my explorations into doping data. Last summer I looked at some data and research on athletic performance that suggest a more pervasive doping problem than sports authorities admit. Then in the fall, I reported on my interactions with WADA, when I asked for test data that would allow for research into some basic questions, foreshadowing my current piece in Nature.

Since then, I’ve engaged in a lengthy correspondence with officials at USADA, where everyone was helpful and responsive. However, like WADA, USADA does not collect or report data that would allow for research on doping prevalence or the effectiveness of anti-doping programs.

I tried to get information from USADA on the number of athletes from which they actually draw for drug tests, that is, the “pool” from which sanctions based on testing violations are reported. In any rigorous statistical analysis, knowing the population from which a sample is taken can be pretty important. USADA told me:

“The number of athletes who may fall under our jurisdiction is large, ranging from a person who may get a day license from an NGB to compete in an event all the way to the athletes that represent Team USA at the Olympics. It has been estimated that around 15 million people participate in Olympic, Paralympic, Pan American and Para-Pan American sport disciplines in the country, however, that 15 million does not represent the actual pool of athletes that is regularly part of our testing program.”

Well, narrowing down that population to somewhere between one person and 15 million people is not really useful, so I pressed USADA for clarification and quantification of that “pool of athletes.” They responded:

“It should be clear that the number that you are asking for ‘the number of athletes under our jurisdiction’ is large and fluctuates. It includes a range of athletes anywhere from someone who gets a day license from an NGB to race in a local marathon, to an athlete who represents the U.S. at the Games. Quantifying the large number of people who participate in all levels of Olympic, Paralympic, Pan American and Para-Pan American sport in this country is not the focus of our testing program.”

OK, I get it: USADA does not account for the population of athletes under its jurisdiction. This does not appear to be the consequence of intentionally trying to hide anything, but rather of a lack of interest in supporting the independent analysis of testing data. USADA explained: “While it appears that you are having challenges using the data for the purposes you wanted, I think it is important to point out that our goal is not just to track data for the sake of tracking data.

“We track the data we need to create the most effective anti-doping program. That isn’t being ‘intransparent’, it is focusing our resources where they are of most use to protect clean athletes. If you are going to share your opinion that we are not transparent, I do hope that you also provide your readers with the appropriate links to the information and data we provide on our website.”

In looking across the academic literature I can find no published example of scholars using WADA or USADA testing data to evaluate the prevalence of doping among athletes or to assess the effectiveness of anti-doping programs. This is pretty good evidence in support of my claim that the data that they do release, however useful it is internally, does not really enable an exploration of questions fundamental to any rigorous policy evaluation.

So we collected the data available on the USADA website to see whether we could perform a simple analysis of the prevalence of doping: the number of elite athletes USADA reported testing and the sanctions that resulted from these tests.

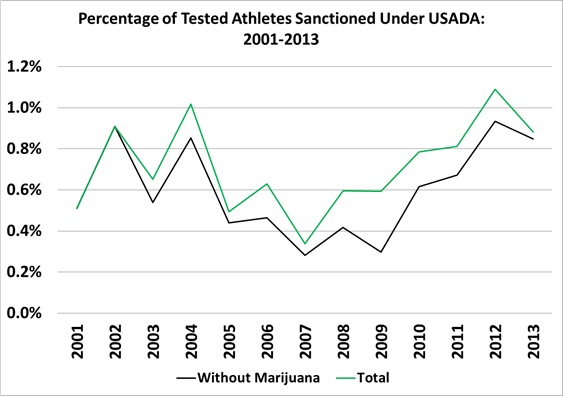

Those data are shown in the figure. In any given year, the number of US athletes in Olympic, Paralympic, Pan American and Para-Pan American sport who are sanctioned for doping as a consequence of the testing program is well under 1.0 per cent of those who are tested (remember, this is not the total number of relevant athletes). Also note that drug tests are not given randomly, but “strategically.”


I have included only sanctions that resulted from “adverse analytical findings,” meaning an adverse test result. I have also broken out sanctions for marijuana from the total. The data show that the number of athletes sanctioned almost doubled from 2001 to 2013, and just about tripled from 2007 to 2013.

Does this mean that doping incidence has doubled or tripled? That USADA’s testing program is two or three times as effective? Unfortunately, we just can’t answer these questions.
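A minimal sketch of why sanction counts alone cannot settle the question, assuming a deliberately simple model (not USADA's) in which expected adverse findings equal the number tested times the share of them who dope times the chance that a test catches a doper. The function name `expected_sanctions` and all input numbers are hypothetical illustrations, not USADA data, and the model ignores the fact that testing is strategic rather than random.

```python
import math


def expected_sanctions(tested: int, prevalence: float, detection_rate: float) -> float:
    """Expected adverse findings under a simple illustrative model.

    Assumes each tested athlete dopes with probability `prevalence` and that a
    doping athlete is caught with probability `detection_rate`. Real testing is
    targeted ("strategic"), so this is a simplification, not USADA's method.
    """
    return tested * prevalence * detection_rate


# Hypothetical inputs chosen for illustration only; they are not USADA figures.
baseline = expected_sanctions(tested=5000, prevalence=0.02, detection_rate=0.05)
more_doping = expected_sanctions(tested=5000, prevalence=0.04, detection_rate=0.05)
better_tests = expected_sanctions(tested=5000, prevalence=0.02, detection_rate=0.10)

# Both scenarios double the expected sanction count relative to the baseline,
# so observing the count alone cannot tell them apart.
print(more_doping / baseline, better_tests / baseline)
print(math.isclose(more_doping, better_tests))
```

Separating the two explanations would require information the sanction counts do not contain, such as the size of the athlete pool and an independent estimate of prevalence.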

The good news here is that just about everyone (athletes, anti-doping agencies and independent scholars) appears to share the same goals. Moving forward, I am hopeful that the anti-doping agencies will better support researchers who want to quantify the prevalence of doping and the effectiveness of anti-doping programs, no matter how uncomfortable the answers might be.

My essay in this week’s Nature can be found here.


Roger Pielke Jr. is a professor of environmental studies at the University of Colorado, where he also directs its Center for Science and Technology Policy Research. He studies, teaches and writes about science, innovation, politics and sports. He has written for The New York Times, The Guardian, FiveThirtyEight and The Wall Street Journal, among many other places. He is thrilled to join Sportingintelligence as a regular contributor. Follow Roger on Twitter (@RogerPielkeJR) and on his blog.
